
The rapid evolution of artificial intelligence has ushered in a new era of intelligent solutions, but the real challenge lies beyond model development: it's in deploying, managing, and scaling AI effectively. Enter MLOps services, the backbone of operational AI success. For leading tech innovators like Tkxel, dynamic MLOps strategies are not just a support mechanism; they're a catalyst for real-time value generation.

In this deep-dive, we explore how MLOps services are revolutionizing AI deployment, enabling faster iteration, reduced operational friction, and sustained scalability. Whether you're a startup or an enterprise, aligning your AI strategy with MLOps is no longer optional; it's mission-critical.

What Are MLOps Services and Why Do They Matter?

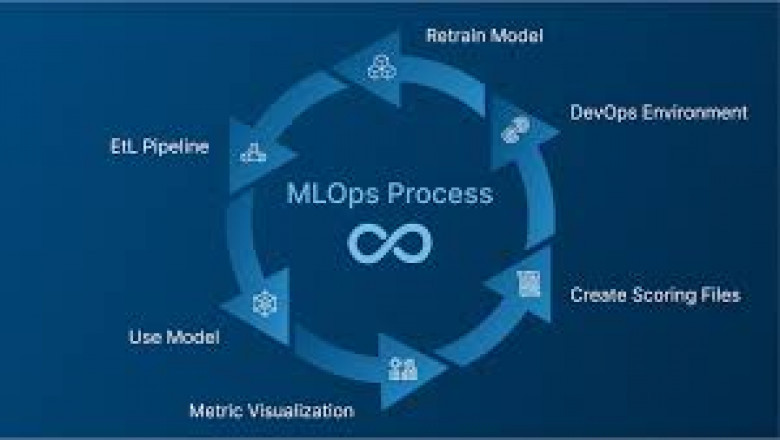

MLOps, short for Machine Learning Operations, is a set of practices that bring DevOps principles to machine learning. This means integrating ML systems into production in a repeatable, efficient, and scalable way.

MLOps services include:

- Model versioning
- Automated retraining pipelines
- CI/CD for machine learning
- Real-time model monitoring
- Drift detection and rollback systems

By embedding these components, businesses ensure that AI models deliver consistent performance and adapt to changing data environments.
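Model versioning and rollback, the first and last items on that list, come down to keeping every trained model plus a pointer to the one serving production. Here is a minimal in-memory sketch; the class names and the storage URIs are hypothetical, and registries such as MLflow's Model Registry provide a persistent, production-grade version of the same idea.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str  # where the trained model is stored (illustrative URIs below)
    metrics: dict


@dataclass
class ModelRegistry:
    """Append-only list of versions plus a pointer to the one serving production."""
    versions: list = field(default_factory=list)
    production: Optional[int] = None
    history: list = field(default_factory=list)  # earlier production versions, newest last

    def register(self, artifact_uri: str, metrics: dict) -> int:
        version = len(self.versions) + 1
        self.versions.append(ModelVersion(version, artifact_uri, metrics))
        return version

    def promote(self, version: int) -> None:
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        if self.production is not None:
            self.history.append(self.production)  # remember what we are replacing
        self.production = version

    def rollback(self) -> int:
        if not self.history:
            raise RuntimeError("no earlier production version to roll back to")
        self.production = self.history.pop()
        return self.production


registry = ModelRegistry()
v1 = registry.register("s3://models/churn/v1", {"auc": 0.81})
v2 = registry.register("s3://models/churn/v2", {"auc": 0.79})
registry.promote(v1)
registry.promote(v2)   # v2 goes live...
registry.rollback()    # ...underperforms, so production points back at v1
```

Because versions are never overwritten, a rollback is just moving a pointer, which is why it can be automated safely.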

The Role of MLOps Services in Modern AI Deployment

Deploying an AI model in a test environment is easy. Operationalizing it across real-world applications is not. That’s where MLOps services come in.

Here’s how MLOps bridges the gap between innovation and implementation:

- Automation: Eliminates repetitive manual processes, accelerating time to deployment.
- Reproducibility: Ensures that models perform consistently across environments.
- Governance: Tracks model lineage, performance metrics, and data provenance.
- Adaptability: Supports frequent updates and retraining to keep models relevant.
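Reproducibility and governance both depend on recording exactly what went into a model. A minimal sketch, assuming a training run is fully described by its raw data, its hyperparameters, a code version string, and a random seed (all values below are illustrative; `lineage_record` and `shuffled_split` are hypothetical helpers):

```python
import hashlib
import json
import random


def sha256_bytes(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def lineage_record(training_data: bytes, params: dict, code_version: str, seed: int) -> dict:
    """Capture what is needed to reproduce, and later audit, one training run."""
    record = {
        "data_sha256": sha256_bytes(training_data),  # data provenance
        "params": params,                            # hyperparameters
        "code_version": code_version,                # e.g. a git commit id
        "seed": seed,                                # pins down randomness in training
    }
    # Canonical JSON (sorted keys, recursively) so identical inputs give an identical fingerprint.
    canonical = json.dumps(record, sort_keys=True).encode("utf-8")
    record["run_fingerprint"] = sha256_bytes(canonical)
    return record


def shuffled_split(rows: list, seed: int) -> list:
    rng = random.Random(seed)  # local RNG: same seed gives the same order on every run
    rows = list(rows)
    rng.shuffle(rows)
    return rows


data = b"age,income,churned\n34,52000,0\n51,61000,1\n"
a = lineage_record(data, {"lr": 0.01, "epochs": 20}, "3f2c9ab", seed=42)
b = lineage_record(data, {"epochs": 20, "lr": 0.01}, "3f2c9ab", seed=42)
same = a["run_fingerprint"] == b["run_fingerprint"]  # True: key order in params does not matter
```

Storing this record next to each model version gives a lineage trail: if two runs share a fingerprint, they were built from the same inputs.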

Streamlining Model Lifecycle Management

Managing the lifecycle of an ML model, from training through deployment to monitoring, is complex. MLOps introduces structured pipelines to automate this journey.

Lifecycle benefits include:

- Reduced human error
- Enhanced collaboration between data scientists and engineers
- Real-time performance analytics
- Continuous improvement loops

When powered by MLOps services, organizations gain the agility to deploy, evaluate, and refine models in weeks instead of months.
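A structured pipeline can be sketched as a chain of stages ending in an automated quality gate, so that no one has to remember to check a metric before shipping. This is a toy, assuming a one-feature threshold "model" and a hypothetical `min_accuracy` bar; in a real setup an orchestrator such as Kubeflow Pipelines or Airflow would take over the role of the hypothetical `run_pipeline`.

```python
from typing import Callable

Stage = Callable[[dict], dict]


def run_pipeline(stages: list[tuple[str, Stage]], context: dict) -> dict:
    """Run named stages in order; each stage reads and extends a shared context."""
    for name, stage in stages:
        context = stage(context)
        context.setdefault("log", []).append(name)  # record which stages completed
    return context


def train(ctx: dict) -> dict:
    # Toy "model": a threshold halfway between the two class means.
    pos = [x for x, y in zip(ctx["train_x"], ctx["train_y"]) if y == 1]
    neg = [x for x, y in zip(ctx["train_x"], ctx["train_y"]) if y == 0]
    ctx["threshold"] = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return ctx


def evaluate(ctx: dict) -> dict:
    preds = [1 if x >= ctx["threshold"] else 0 for x in ctx["test_x"]]
    hits = sum(p == y for p, y in zip(preds, ctx["test_y"]))
    ctx["accuracy"] = hits / len(preds)
    return ctx


def quality_gate(ctx: dict) -> dict:
    # Stop the pipeline instead of shipping a model that misses the bar.
    if ctx["accuracy"] < ctx["min_accuracy"]:
        raise RuntimeError(f"accuracy {ctx['accuracy']:.2f} is below {ctx['min_accuracy']}")
    return ctx


def deploy(ctx: dict) -> dict:
    ctx["deployed"] = True  # placeholder for pushing the model to a serving environment
    return ctx


PIPELINE = [("train", train), ("evaluate", evaluate), ("quality_gate", quality_gate), ("deploy", deploy)]

result = run_pipeline(PIPELINE, {
    "train_x": [1, 2, 3, 7, 8, 9], "train_y": [0, 0, 0, 1, 1, 1],
    "test_x": [2, 4, 6, 8], "test_y": [0, 0, 1, 1],
    "min_accuracy": 0.9,
})
```

Here the threshold lands at 5.0, all four test points are classified correctly, and the model passes the gate; raising `min_accuracy` above 1.0 would stop the run before `deploy`.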

Scaling AI Projects with Confidence

Scaling AI means moving from experimental notebooks to robust, production-grade systems. MLOps makes this transition seamless by:

- Standardizing development processes
- Enabling scalable infrastructure using Kubernetes and containers
- Automating model deployment across cloud, edge, and on-prem environments

With MLOps, AI projects become enterprise-ready, capable of handling diverse workloads and rapid shifts in demand.
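On Kubernetes, a containerized model server is usually described declaratively. A sketch that builds a standard `apps/v1` Deployment; the helper name, image, port, and resource sizes are placeholders, not recommendations:

```python
import json


def deployment_manifest(name: str, image: str, replicas: int,
                        cpu: str = "500m", memory: str = "1Gi", port: int = 8080) -> dict:
    """Build a Kubernetes apps/v1 Deployment for a containerized model server."""
    labels = {"app": name}  # the selector must match the pod template labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        "resources": {
                            "requests": {"cpu": cpu, "memory": memory},
                            "limits": {"cpu": cpu, "memory": memory},
                        },
                    }],
                },
            },
        },
    }


manifest = deployment_manifest("churn-model", "registry.example.com/churn-model:1.4.0", replicas=3)
print(json.dumps(manifest, indent=2))
```

Since `kubectl apply -f` accepts JSON as well as YAML, the output can be applied directly. Scaling out is then a matter of changing `replicas`, or of handing that job to a HorizontalPodAutoscaler, and the same image can be promoted across environments by changing only these parameters.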

Data Quality and Pipeline Integrity: The Hidden Heroes

No model is better than the data that feeds it. MLOps ensures high data integrity through:

| MLOps Feature | Impact on Data Quality |
|---|---|
| Data versioning | Tracks changes and enables rollback |
| Validation rules | Catches errors before training |
| Feature stores | Reuses and governs feature engineering |
| Pipeline orchestration | Ensures data freshness and completeness |

The result? Models that are trained on reliable, up-to-date, and well-governed datasets.
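Validation rules are the easiest of these to picture. A minimal sketch, assuming rows arrive as Python dicts and using hypothetical rules for an illustrative customer dataset; libraries such as TensorFlow Data Validation or Great Expectations apply the same idea at production scale.

```python
from typing import Any, Callable

# Each rule: (column, predicate, message). These rules are illustrative only.
Rule = tuple[str, Callable[[Any], bool], str]

RULES: list[Rule] = [
    ("age", lambda v: isinstance(v, int) and 18 <= v <= 120, "age must be an integer in [18, 120]"),
    ("income", lambda v: isinstance(v, (int, float)) and v >= 0, "income must be non-negative"),
    ("country", lambda v: isinstance(v, str) and len(v) == 2, "country must be a 2-letter code"),
]


def validate(rows: list[dict], rules: list[Rule]) -> list[str]:
    """Return human-readable errors; an empty list means the batch may proceed to training."""
    errors = []
    for i, row in enumerate(rows):
        for column, check, message in rules:
            if column not in row:
                errors.append(f"row {i}: missing column '{column}'")
            elif not check(row[column]):
                errors.append(f"row {i}: {message} (got {row[column]!r})")
    return errors


batch = [
    {"age": 34, "income": 52000.0, "country": "US"},
    {"age": 17, "income": -10, "country": "USA"},
    {"age": 45, "income": 61000},
]
problems = validate(batch, RULES)  # a pipeline would halt here, before training, if non-empty
```

Here the second row breaks three rules and the third is missing a column, so the batch is rejected with four specific messages instead of silently degrading the next model.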

Real-Time Monitoring and Model Drift Detection

One of the critical challenges in AI deployment is drift: a gradual loss of model accuracy as the data seen in production diverges from the data the model was trained on. MLOps services provide:

- Model performance dashboards
- Alert systems for anomalies or threshold breaches
- Automated retraining triggers
- Audit logs for compliance

These capabilities ensure that your AI doesn’t just launch; it thrives and adapts.
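Drift detection usually means comparing live inputs against a training-time baseline. One common statistic is the Population Stability Index (PSI), the sum over bins of (actual share − expected share) × ln(actual share / expected share). The sketch computes it over fixed bins and raises an alert flag; the bin edges, the sample data, and the 0.25 threshold (a widely used rule of thumb, not a universal standard) are assumptions.

```python
import math


def bin_proportions(values: list[float], edges: list[float]) -> list[float]:
    """Share of values in each bin defined by sorted inner edges (len(edges) + 1 bins)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(v >= e for e in edges)  # number of edges at or below v
        counts[idx] += 1
    return [c / len(values) for c in counts]


def psi(baseline: list[float], current: list[float], edges: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between baseline (training) and current (live) data."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) and division by zero for empty bins
        total += (a - e) * math.log(a / e)
    return total


ALERT_THRESHOLD = 0.25  # rule of thumb: PSI above ~0.25 suggests a significant shift


def check_drift(baseline: list[float], current: list[float], edges: list[float]) -> dict:
    score = psi(baseline, current, edges)
    return {"psi": round(score, 4), "alert": score > ALERT_THRESHOLD}


edges = [10, 20, 30]
baseline = [5, 15, 25, 35] * 25  # evenly spread across the four bins
shifted = [25, 35] * 50          # live traffic now sits entirely in the upper bins
stable_report = check_drift(baseline, baseline, edges)  # {'psi': 0.0, 'alert': False}
drift_report = check_drift(baseline, shifted, edges)
```

The alert flag is what a monitoring system would route to a dashboard, a pager, or an automated retraining trigger.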

Security, Compliance, and Ethical Deployment

AI governance is more than technical performance; it’s also about trust, compliance, and fairness. MLOps integrates safeguards like:

- Access controls and audit trails
- Compliance with standards like GDPR and HIPAA
- Bias detection and mitigation tools
- Secure data pipelines with encryption and tokenization

MLOps services help businesses deploy AI responsibly, earning stakeholder and public trust.
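Bias detection can start with something as simple as comparing outcomes across groups. The sketch computes the demographic parity difference, one of several common fairness metrics, for a hypothetical binary classifier; the predictions, group labels, and 0.1 tolerance are illustrative policy assumptions.

```python
from collections import defaultdict


def positive_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


MAX_GAP = 0.1  # hypothetical tolerance set by a governance policy

preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gap = demographic_parity_difference(preds, groups)  # 0.75 - 0.25 = 0.5
flagged = gap > MAX_GAP  # would block promotion or open a review in a real pipeline
```

Run as a pipeline step alongside accuracy checks, a metric like this turns "bias detection" from a policy statement into a gate that can actually stop a release.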

How MLOps Services Boost Cross-Functional Collaboration

The success of AI doesn’t rest solely on data scientists. MLOps fosters synergy between:

- Data scientists, who build models
- ML engineers, who deploy and maintain them
- IT teams, who provide infrastructure
- Business leaders, who drive outcomes

This collaboration is enabled through shared tools, clear communication protocols, and centralized platforms provided by MLOps frameworks.

The ROI of Investing in MLOps Services

While MLOps may seem like a technical layer, it directly contributes to business KPIs:

| Metric | Before MLOps | After MLOps |
|---|---|---|
| Time to deployment | 3–6 months | 2–4 weeks |
| Model failure rate | High | Significantly lower |
| Compliance issues | Frequent | Rare |
| Data science productivity | Fragmented | Streamlined |

Simply put, MLOps pays for itself in efficiency, scalability, and peace of mind.

Why Choose Tkxel’s MLOps Services?

As a leader in digital transformation, Tkxel offers comprehensive MLOps solutions tailored to real-world business needs. Its services include:

- End-to-end model lifecycle management
- Secure cloud and hybrid infrastructure
- Integrated CI/CD and monitoring pipelines
- Customizable governance and compliance solutions

Tkxel's MLOps services are built to help organizations transition from AI exploration to enterprise-grade execution.

FAQs

What is the main benefit of using MLOps services?

MLOps services streamline the deployment, monitoring, and management of machine learning models, making them more reliable, scalable, and production-ready.

Can MLOps help with compliance and data governance?

Yes. MLOps frameworks include tools for tracking model lineage, ensuring data security, and maintaining audit trails for regulatory compliance.

Is MLOps only useful for large enterprises?

Not at all. Startups and mid-sized companies benefit greatly by reducing deployment costs and accelerating time to market with reliable processes.

How do MLOps services support model retraining?

MLOps enables automated pipelines that retrain models based on triggers such as data drift or performance degradation, keeping models fresh and accurate.
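A retraining trigger is essentially a policy check run on a schedule. A minimal sketch, assuming a drift score (such as a PSI value) and live accuracy are already being collected by monitoring; the `RetrainPolicy` thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetrainPolicy:
    max_drift: float = 0.25          # hypothetical ceiling for the drift score
    max_accuracy_drop: float = 0.05  # hypothetical tolerated drop vs. accuracy at deployment


def should_retrain(policy: RetrainPolicy, drift_score: float,
                   baseline_accuracy: float, live_accuracy: float) -> tuple[bool, list[str]]:
    """Return whether to retrain, plus the reasons (useful as an audit-log entry)."""
    reasons = []
    if drift_score > policy.max_drift:
        reasons.append(f"drift {drift_score:.2f} above limit {policy.max_drift}")
    if baseline_accuracy - live_accuracy > policy.max_accuracy_drop:
        reasons.append(f"accuracy fell from {baseline_accuracy:.2f} to {live_accuracy:.2f}")
    return bool(reasons), reasons


policy = RetrainPolicy()
calm = should_retrain(policy, drift_score=0.08, baseline_accuracy=0.91, live_accuracy=0.90)
drifted = should_retrain(policy, drift_score=0.31, baseline_accuracy=0.91, live_accuracy=0.83)
```

A scheduler, for example an Airflow DAG, could run this check daily and start the training pipeline whenever the first value is `True`.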

What tools are commonly used in MLOps services?

Popular tools include MLflow, Kubeflow, Airflow, TensorFlow Extended (TFX), and cloud-native platforms such as Amazon SageMaker (AWS), Azure Machine Learning (Azure), and Vertex AI (GCP).

Can MLOps be integrated with existing DevOps pipelines?

Absolutely. MLOps extends DevOps practices to machine learning workflows, allowing for unified deployment and monitoring strategies.

Conclusion

AI innovation is booming, but without scalable deployment, its value remains locked. That’s where MLOps services come into play. By automating lifecycles, ensuring data integrity, enabling real-time monitoring, and fostering cross-functional collaboration, MLOps turns experimentation into execution. With partners like Tkxel, businesses can confidently take AI from the lab to the world, faster, safer, and smarter.
